Software Quality Management Best Practices | 5 Do's & Don'ts

Reliable software and sound quality management processes sit at the core of a memorable digital experience.

Quality management in software can be summarized in two points:

- Developers don’t take the full blame for buggy code.

- Testing isn’t a release bottleneck. If it feels like one, you’re just doing it wrong.

Stakeholders and management always want their digital products to launch successfully, yet software testing is often seen as the step that rejects builds and pushes back the delivery date.

Why is that?

When testing doesn’t run in parallel with development, minor bugs have time to grow into critical loopholes. With an endless list of tickets to prioritize and resolve, software projects quickly end up with frustrated teams and unreleasable apps. Tested, reliable software is production-ready software. Read these 5 do’s and don’ts of Agile software quality management and apply the best practices to keep customers satisfied with every release.

5 Do’s of Software Quality Management

1. Write testable code: test first, not last

Quality, maintainability, and fewer bugs are what every technical lead wants for their team’s codebase. Conveniently, test-driven development (TDD) offers just that.

The philosophy relies on the results of automated tests: green means the code works, red means it doesn’t. TDD starts the development process by writing a test before writing the code it checks. Because it spells out up front the ways the code can fail, TDD has become popular among developers for:

- Forecasting failures

- Naturally infusing quality into code

- Reducing overall code maintenance

- Meeting test requirements and standards

- Refactoring

- Documentation

- Decreasing the number of bugs over time
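The red-green cycle is easiest to see in a tiny example. Below is a minimal sketch using Python’s built-in `unittest`; `slugify` is a hypothetical function chosen only for illustration. The tests are written first and describe the expected behavior; the implementation underneath is "just enough code" to turn them green.

```python
import re
import unittest


# Step 1 (red): write the tests first, describing how a hypothetical
# slugify() helper should behave before it exists.
class SlugifyTest(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_strips_punctuation(self):
        self.assertEqual(slugify("Do's & Don'ts!"), "dos-donts")

    def test_empty_string_stays_empty(self):
        self.assertEqual(slugify(""), "")


# Step 2 (green): write just enough code to make the tests pass.
def slugify(text: str) -> str:
    # Drop everything except letters, digits and whitespace.
    cleaned = re.sub(r"[^A-Za-z0-9\s]", "", text)
    # Lowercase, then join the remaining words with single hyphens.
    return "-".join(cleaned.lower().split())
```

Running `python -m unittest` on this module before `slugify` is defined reports each test as an error (a `NameError`), which is the "red" step; once the function is added, the suite goes green and can be refactored safely.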

Of course, the extra care that comes with TDD can lengthen the delivery cycle. Short-lived projects might not be the best fit for this methodology. Teams that must maintain software and keep it working over longer periods, however, will benefit from maintainable code as their app grows.

Project managers may, sadly, have to negotiate that extra time with stakeholders and clients. The argument to make: if quality is baked in from the start, there’ll be fewer failures to deal with later.

2. Automate regression testing

Repetitive testing types are the best candidates for automated testing. Code changes, regardless of their criticality, always need to be regression-tested.

Sadly, in many teams regression testing is still done manually. Regression testing is all about wiring up both new and previously developed test suites to run on demand. Teams run into time constraints when most of their time is spent retesting existing functionality, while the areas that need human effort the most, like exploratory testing to find edge cases, get rushed through.

Automated flows let teams quickly select the tests that need to run without reinventing the wheel and rewriting every test case. Test suites can be scheduled to run overnight and report what needs to be fixed by the next morning.
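Test selection can be as simple as mapping each regression test to the parts of the codebase it exercises. The sketch below assumes a hypothetical, hand-maintained `TEST_IMPACT_MAP`; in practice that mapping might come from coverage data or test tags, and real tools have their own selection mechanisms.

```python
from typing import Dict, List, Set

# Hypothetical mapping from each regression test to the source modules
# it exercises. Real teams might derive this from coverage data or tags.
TEST_IMPACT_MAP: Dict[str, Set[str]] = {
    "test_login_flow": {"auth", "session"},
    "test_checkout_total": {"cart", "pricing"},
    "test_apply_discount": {"pricing"},
    "test_profile_update": {"users", "session"},
}


def select_regression_tests(changed_modules: Set[str],
                            impact_map: Dict[str, Set[str]]) -> List[str]:
    """Return, sorted by name, every test that touches a changed module."""
    return sorted(
        test for test, modules in impact_map.items()
        if modules & changed_modules  # a non-empty overlap means "affected"
    )


# A commit that only changes the pricing module:
print(select_regression_tests({"pricing"}, TEST_IMPACT_MAP))
```

A change to a shared module such as `session` would pull in both the login and profile tests. Changes to modules missing from the map select nothing here, so a cautious setup would fall back to the full suite in that case.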

Making sure apps work across browsers, mobile devices and operating systems is also less of a hassle. Most automated testing tools let you select multiple environments at once.
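Running one suite across several environments boils down to expanding a test matrix. Here is a minimal, tool-agnostic Python sketch; the browser and OS labels are hypothetical, and each resulting entry would be handed to whatever runner or cloud grid the team uses.

```python
from itertools import product
from typing import Dict, List

# Hypothetical target environments; real tools use their own identifiers.
BROWSERS = ["chrome", "firefox", "safari"]
OPERATING_SYSTEMS = ["windows-11", "macos-14"]

# Combinations that don't exist in practice (current Safari isn't on Windows).
UNSUPPORTED = {("safari", "windows-11")}


def build_matrix(browsers: List[str], systems: List[str]) -> List[Dict[str, str]]:
    """Expand browsers x operating systems into one entry per runnable environment."""
    return [
        {"browser": b, "os": o}
        for b, o in product(browsers, systems)
        if (b, o) not in UNSUPPORTED
    ]


for env in build_matrix(BROWSERS, OPERATING_SYSTEMS):
    print(f"run suite on {env['browser']} / {env['os']}")
```

The matrix grows multiplicatively: every new browser or OS version adds a whole row or column of environments, which is why covering them with physical machines gets expensive fast.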

3. Use low-cost cloud environments for software quality management

Optimal test coverage has traditionally meant having physical machines on hand. Are you testing on macOS, on Windows, or on different versions of both? Each one is a separate machine to test on.

Here’s an example of how cloud environments fit into Spotify’s DevOps engineering culture.

New ideas and experiments ship constantly, aimed at turning the user experience into a competitive edge. In full DevOps teams, keeping build times short takes a deliberate strategy. Shipping fast means beating competitors and getting hands-on with the latest technology.

It doesn’t take long for electronics manufacturers like Apple or Google to release their latest models. For software teams, this means getting timely access to those devices and making sure their digital products work on them. Buying every new machine quickly drives up testing costs.

Cloud test environments address this with a subscription-based model that grants access to a wide array of machines, including the newest ones. All teams have to do is wait for the vendor to announce that a new environment is available, then start testing.

Not every engineering team has a complex, interconnected web music player like Spotify, and for a small startup, cost is also an issue. Teams with lower application complexity, say a single-page web app for cooking recipes, don’t need to sink a large upfront budget into acquiring 50 laptops. An upfront payment that high rarely makes sense when the business also has to fund other areas.

This is especially true for businesses in the early stages of development, where first impressions and trust with end-users are key sales drivers. In this scenario, cloud environments offer a more affordable route to end-to-end test coverage.

Security also matters at scale. Organizations in finance, federal and state government, healthcare and similar industries must strictly protect personal information and customer data. With regulations ranging from HIPAA to GDPR, a private cloud dedicated to testing activities is often essential.

Cloud technologies essentially turn browser, device and operating system configurations into reusable test environments. The main highlights of cloud testing are:

- Multi-tenant and single-tenant deployment of testing applications

- Independent data centers and data residency

- Dynamic scaling to handle the different volumes of workload

- Test data backup and restoration for disaster recovery

- No maintenance overhead for deployment, hosting, infrastructure/application upgrades, and customer application support

- Quick and easy test environment setups using pre-configured cloud virtual machines

- Enterprise-level security on the cloud: custom Service Level Agreements (SLAs) that cater to business needs and sector-specific security standards

4. Go low-code where it feels right

Low-code/no-code/codeless testing solutions aren’t villains out to make coding professions obsolete.

The target users of low-code platforms are, in general, still technical personnel, and this holds true for quality engineering. Low-code testing tools work best when teams have a mix of testing and programming experience. It’s uncommon for a team to be made up entirely of senior members. There will be learning curves, and the common practice is to let newcomers learn from the work of more experienced colleagues.

For a manual tester, or anyone who has just stepped into the realm of automation and Agile, learning the craft takes time. Generally, the developer or automation QE in charge creates the keywords, test cases and profiles. These test artifacts are then shared with the rest of the team, who can gradually build tests and learn how the codebase works.

For developers, low-code means staying productive with less code to write.

Imagine not having to press reset and rewrite test code for every release, feature or code change. Instead, the low-code/codeless movement lets developers focus on maintaining test suites and writing custom code for advanced scenarios. Even when the team has to develop new tests, reusing and repurposing existing artifacts gives a clear productivity boost.
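The keyword idea itself isn’t tied to any one tool. Here is a minimal Python sketch, assuming a hypothetical `FakeApp` in place of a real UI driver: an experienced team member writes the `login` keyword once, and every test that needs to sign in reuses it, so a change to the login screen means updating one function rather than every test.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeApp:
    """Hypothetical stand-in for a UI driver: records typed fields and clicks."""
    fields: Dict[str, str] = field(default_factory=dict)
    clicks: List[str] = field(default_factory=list)
    logged_in: bool = False

    def type_into(self, name: str, value: str) -> None:
        self.fields[name] = value

    def click(self, button: str) -> None:
        self.clicks.append(button)
        # Pretend the app accepts exactly one demo account.
        if button == "login":
            self.logged_in = (self.fields.get("username") == "demo"
                              and self.fields.get("password") == "secret")


# Shared keyword, written once and reused across test cases.
def login(app: FakeApp, username: str, password: str) -> None:
    app.type_into("username", username)
    app.type_into("password", password)
    app.click("login")


# Test cases composed from keywords, e.g. by newer team members.
def test_valid_login() -> None:
    app = FakeApp()
    login(app, "demo", "secret")
    assert app.logged_in


def test_invalid_login() -> None:
    app = FakeApp()
    login(app, "demo", "wrong")
    assert not app.logged_in


test_valid_login()
test_invalid_login()
print("keyword-based tests passed")
```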

Tip: Record-and-playback is among the easiest methods to create tests by interacting with an app’s UI. As soon as the frontend is built, quality engineers can jump in, start writing automated UI tests and avoid testing bottlenecks. Have your quality engineers try it out and see how easy it is to start testing in minutes.

Learn Katalon Studio: Create Automated Tests with Record and Playback

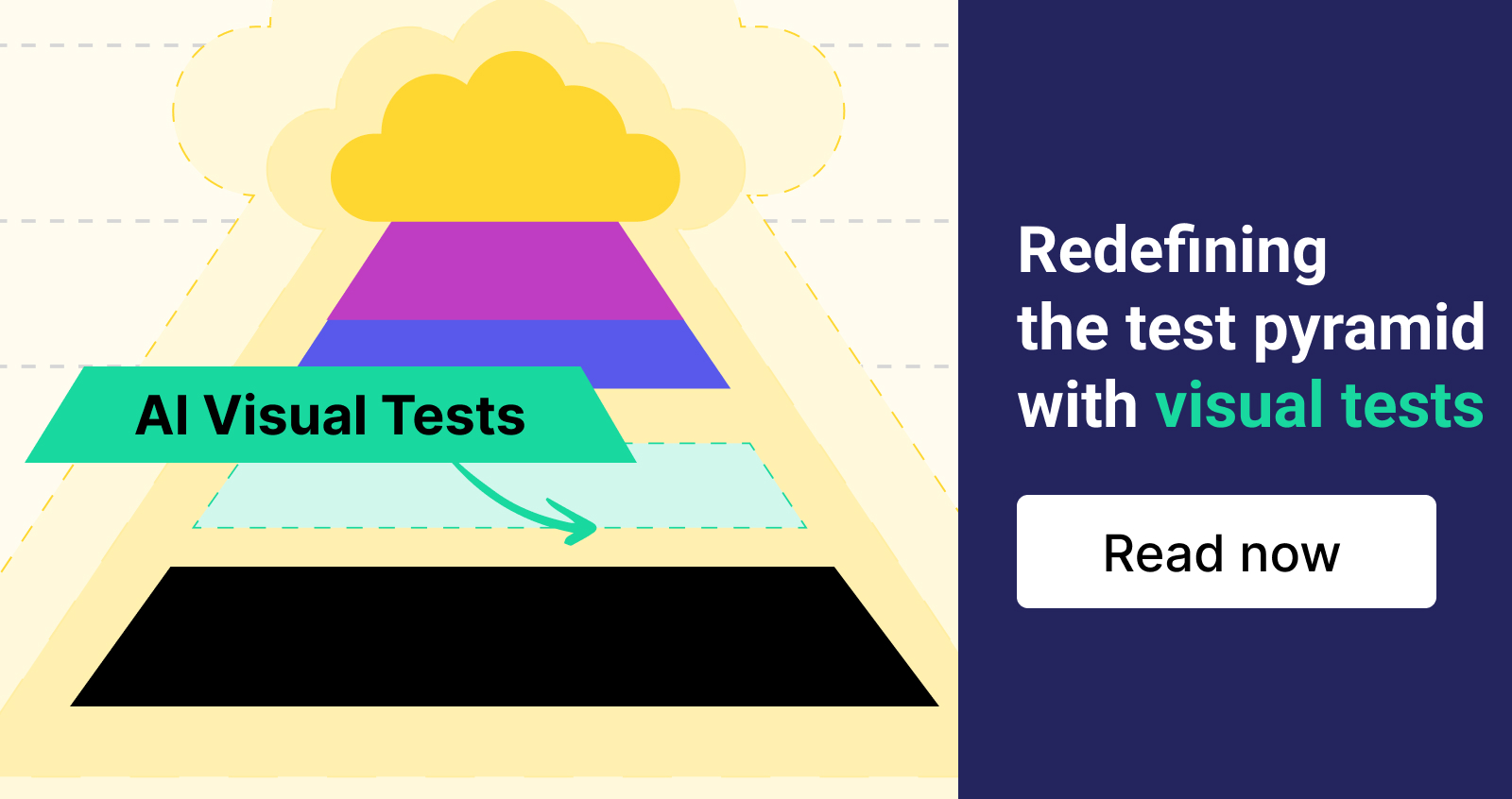

5. Always plan and have a testing strategy: the testing pyramid

The testing pyramid shows three levels of testing and how they compare in cost and frequency. At the top is end-to-end (E2E) testing, also known as UI testing.

Source: Semaphore

Automated end-to-end tests replicate the intricate sequence of actions an end-user would take in a specific scenario.

The system under test (SUT) needs to reach a certain level of completeness before end-to-end tests can run. These tests click buttons, input values and expect the app to show the appropriate data. As its position at the top of the pyramid suggests, automated end-to-end testing is the heaviest level in test steps and the slowest to write and run.

On the time front, flaky tests, timing issues and dynamic elements are notorious headaches that drag out end-to-end testing.
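A common remedy for timing-related flakiness is replacing fixed sleeps with polling waits that return as soon as a condition holds. Below is a minimal, framework-agnostic Python sketch; `wait_until` is a hypothetical helper, and UI frameworks such as Selenium ship their own explicit-wait utilities that you would normally use instead.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 5.0,
               interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Returns True as soon as the condition holds, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    # One final check, in case the condition became true right at the deadline.
    return condition()


start = time.monotonic()


def element_visible() -> bool:
    # Simulated dynamic element that appears about 0.3 s after the page loads.
    return time.monotonic() - start > 0.3


found = wait_until(element_visible, timeout=2.0)
print("element found" if found else "timed out")
```

Unlike a hard-coded `time.sleep(2)`, the poll finishes after roughly 0.3 s here, and a slow environment only fails once the full timeout is exhausted.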

Source: Martin Fowler

Integration testing verifies how an app behaves with databases, file systems or separate services. Calling a third-party weather REST API or reading and writing values in a database are real examples of what unit tests do not cover.
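A minimal sketch of the database case, using Python’s built-in `sqlite3` with an in-memory database as a stand-in for the app’s real one (an assumption: production databases differ in SQL dialect and behavior). `save_user` and `find_email` are hypothetical. The test passes only if the SQL, the schema and the Python code agree, which a unit test with a mocked database would not verify.

```python
import sqlite3
from typing import Optional


# Hypothetical data-access functions under test.
def save_user(conn: sqlite3.Connection, name: str, email: str) -> int:
    cur = conn.execute(
        "INSERT INTO users (name, email) VALUES (?, ?)", (name, email)
    )
    conn.commit()
    return cur.lastrowid


def find_email(conn: sqlite3.Connection, name: str) -> Optional[str]:
    row = conn.execute(
        "SELECT email FROM users WHERE name = ?", (name,)
    ).fetchone()
    return row[0] if row else None


def test_user_round_trip() -> None:
    # A real (in-memory) database, so the SQL itself is exercised.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT UNIQUE, email TEXT)"
    )
    user_id = save_user(conn, "ada", "ada@example.com")
    assert user_id == 1
    assert find_email(conn, "ada") == "ada@example.com"
    assert find_email(conn, "grace") is None
    conn.close()


test_user_round_trip()
```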

At the bottom of the pyramid, unit tests are the lightest and easiest to run. Thousands of unit tests covering functions, classes, methods or modules can typically run in a matter of minutes. Like API tests, unit tests don’t have to wait until a UI is built.
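By contrast, a unit test isolates the code from that third-party weather API. Below is a minimal sketch using a stub from Python’s `unittest.mock`; `clothing_advice` and its temperature thresholds are hypothetical. Because nothing touches the network, tests like these run in milliseconds, while checking that the real API responds as expected is left to integration tests.

```python
from typing import Callable
from unittest.mock import Mock


# Hypothetical business logic. The weather lookup is passed in, so a unit
# test can swap the real REST call for a stub.
def clothing_advice(city: str, get_temperature_c: Callable[[str], float]) -> str:
    temp = get_temperature_c(city)
    if temp < 5:
        return "coat"
    if temp < 18:
        return "jacket"
    return "t-shirt"


def test_cold_weather_suggests_coat() -> None:
    fake_weather = Mock(return_value=-2.0)  # no network call is made
    assert clothing_advice("Oslo", fake_weather) == "coat"
    fake_weather.assert_called_once_with("Oslo")


def test_boundary_between_jacket_and_tshirt() -> None:
    assert clothing_advice("Lisbon", Mock(return_value=17.9)) == "jacket"
    assert clothing_advice("Lisbon", Mock(return_value=18.0)) == "t-shirt"


test_cold_weather_suggests_coat()
test_boundary_between_jacket_and_tshirt()
```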

In summary, the testing pyramid is a strategic guide to the scope of each testing level. Other models exist too, such as the Testing Trophy, which suits some contexts better, and the Ice Cream Cone, an inverted pyramid heavy on manual and UI tests that is generally described as an anti-pattern.

Read more: Testing strategy in DevOps and Standardizing automation in quality processes

5 Don'ts of Software Quality Management

1. Not involving stakeholders in decision-making

Time-to-market is a pressing need. Business leaders and executives often believe that time spent testing equals release delays, without truly grasping the root cause of those delays.

As a result, requirements can quickly become unrealistic and lead to burnout.

Depending on the nature of each project, product or business, the group of stakeholders involved in testing activities varies. What they often have in common is a lack of technical expertise (that’s why they hire engineers!). Therefore, feedback from project managers (PMs), quality engineers and developers is imperative before making any call.

Let’s explore a common product release scenario.

Say there’s only a week left until the live date. The team receives a Slack message from the PM saying that a business requirement has changed. The quality engineers and developers were not part of the meeting where this call was made. Had they been, they could have pointed out that the change touches a very complex section of the legacy codebase, and the risk of introducing more regressions could have been forecast.

In the end, the build is handed over to the testing team at the last minute, and there isn’t enough time to run manual checks or automated test suites.

Instead of reaching out to the PM and explaining the risks involved, the team decides to just go with it. All the client hears is a pushed-back deadline, and the client loses trust in the team, since the change was agreed to without any caveats.

A trap teams often fall into is “doing what they’re told.” Acknowledge that the client or management has the final say, but always provide insights from a practitioner’s perspective so that every call is informed and every risk is calculated.

2. Point fingers for software bugs

Bugs love to hide and then act up once the final product reaches end-users. Regardless of the developers’ seniority or how thorough code reviews are, there will always be cases that teams can’t foresee.

There isn’t an all-encompassing list of reasons why software bugs exist, but the most common ones are:

- Minimal testing processes. Writing non-testable code or only testing on popular platforms (browsers, devices, OS) is a recipe for disaster. Not shifting left and testing earlier usually results in delays, which is to be expected when quality engineers are the only ones responsible for software quality.

- Requirements guesswork. Clients and business stakeholders have big hopes. Sadly, these visions don’t automatically translate into realistic requirements, specifications and acceptance criteria for software teams. As a result, high-level visions can be misinterpreted and yield a product that stakeholders do not want.

- Complex legacy systems. Legacy systems are typically massive codebases built with an older framework or language, or a blend of coding approaches. Often described as “code that works but no one knows why,” long-developed systems are prone to introducing bugs and technical debt.

- Developers are human. Coding typos, like writing “=” instead of “==”, also result in errors. Most IDEs, compilers and static analysis tools today catch these, but better coding practices and maintainable code are better long-term solutions.

- Changes break things. The closer teams get to the launch date, the riskier code changes become. A common example is the regression bug, where a new commit damages or breaks existing functionality nearby.

Plus, aiming for bug-free code isn’t as good as it sounds. Small teams might be able to afford the slower pace of development, but clients and larger teams won’t. Bug tickets move through Backlog, To Do, In Progress and Done, and the decision whether to fix them depends on:

- The bug severity level

- How likely end-users are to encounter them

- How difficult they will be to reproduce and resolve

- The return on fixing them versus leaving them
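One way to make those factors explicit is a simple scoring rule. The Python sketch below is purely illustrative: the 1-4 scales, the "impact divided by effort" formula and the sample bugs are all hypothetical, and a real team would tune or replace them.

```python
from dataclasses import dataclass


@dataclass
class Bug:
    title: str
    severity: int    # 1 (cosmetic) .. 4 (critical)
    likelihood: int  # 1 (rare edge case) .. 4 (hit by most users)
    effort: int      # 1 (quick fix) .. 4 (hard to reproduce and resolve)


def triage_score(bug: Bug) -> float:
    """Hypothetical 'return on fixing' score: user impact divided by effort."""
    return (bug.severity * bug.likelihood) / bug.effort


backlog = [
    Bug("Typo in footer", severity=1, likelihood=4, effort=1),
    Bug("Checkout fails on Safari", severity=4, likelihood=3, effort=2),
    Bug("Crash after 10k-row export", severity=3, likelihood=1, effort=4),
]

# Highest return first; a team might fix everything above an agreed cutoff.
for bug in sorted(backlog, key=triage_score, reverse=True):
    print(f"{triage_score(bug):5.2f}  {bug.title}")
```

The point is less the exact formula than making the trade-off visible, so the decision to defer a bug is a deliberate call rather than an accident of the backlog.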

3. Stay armed with the wrong technologies: automation testing framework vs. tools

Whether it’s AI, autonomous or automated testing, selecting the right tools means understanding what a team needs. The team-fit factor plays a huge role in establishing a sturdy foundation for the project.

Many wrongly assume that “free” means cost-saving. Free testing frameworks are great for long-established teams that have spent years hiring the best talent to code and build software. It’s that coding expertise that makes building and maintaining a framework less of a hassle. On top of this, those engineers also have to dedicate time to creating test artifacts for the rest of the team to use.

Yet not every team has an abundance of dedicated, experienced developers. Even those that do may find that framework maintenance on top of testing pushes them to their limits. Teams with less experienced members need to spend most of their time actually practicing testing: mastering tools quickly, then spending more time learning the application and applying the right testing techniques.

Here are a few questions to consider for the tool evaluation and proof-of-concept process:

- How skilled are your manual and automation QEs at coding or scripting automated tests? In which areas will developers have to participate?

- What percentage of manual tests is it optimal to automate?

- Are native integrations available for test management, bug tracking and other agile testing tools?

- How many application types is the team dealing with (e.g., web/mobile/desktop apps, APIs)?

Read more: Agile Testing Methodology: Processes & Leading Practices

4. Don’t silo developers and quality engineering

With agility a must, teams need to adopt good collaboration practices.

The Waterfall model has a bad reputation for keeping team members separated: developers code on their own and hand builds over for testing near the end of the development cycle. In short, testing and development don’t happen in parallel.

Bottlenecks often result from treating software testing as detection rather than mitigation. Bugs grow: they accumulate as new code and dependencies are added.

To truly embrace Agile, quality engineers need to be involved from the very start. Software testing isn’t a final quality gate to check off before release. Their participation in sessions such as requirements planning, acceptance criteria refinement and sprint planning is pivotal.

Working together, quality engineers and developers build a shared understanding of the system under test and produce higher-quality work. A simple example is reducing the impact of code changes by identifying areas that are prone to change and setting up automated test suites for them in advance.

Another angle on silos is the choice between single-purpose testing tools and quality management platforms. For example, API testing tools like Postman specialize in API testing.

You can’t expect to use the same tool for UI testing or load testing.

A common path teams take is adopting more tools to test other areas of the software. As a result, many end up creating tests in one place, executing them somewhere else, then connecting a third-party extension for reporting.

The resulting silos mean scattered test data and an incomplete picture of software quality. A software quality management platform is a modern, comprehensive way to tackle this. For testing, quality management platforms provide an all-in-one automated testing IDE to author, run, analyze and maintain test suites. They keep every team member involved through integrations with version control, test management, CI/CD and DevOps tools.

5. Manual vs. automated testing: picking sides

Just like low-code/codeless solutions, automation isn’t a miracle that solves all of our problems. Striking a balance between manual and automated testing calls for a proper plan and a careful thought process.

A detailed guide to moving from manual to automated testing can be found in the [Free Ebook] Manual to Automated Testing.

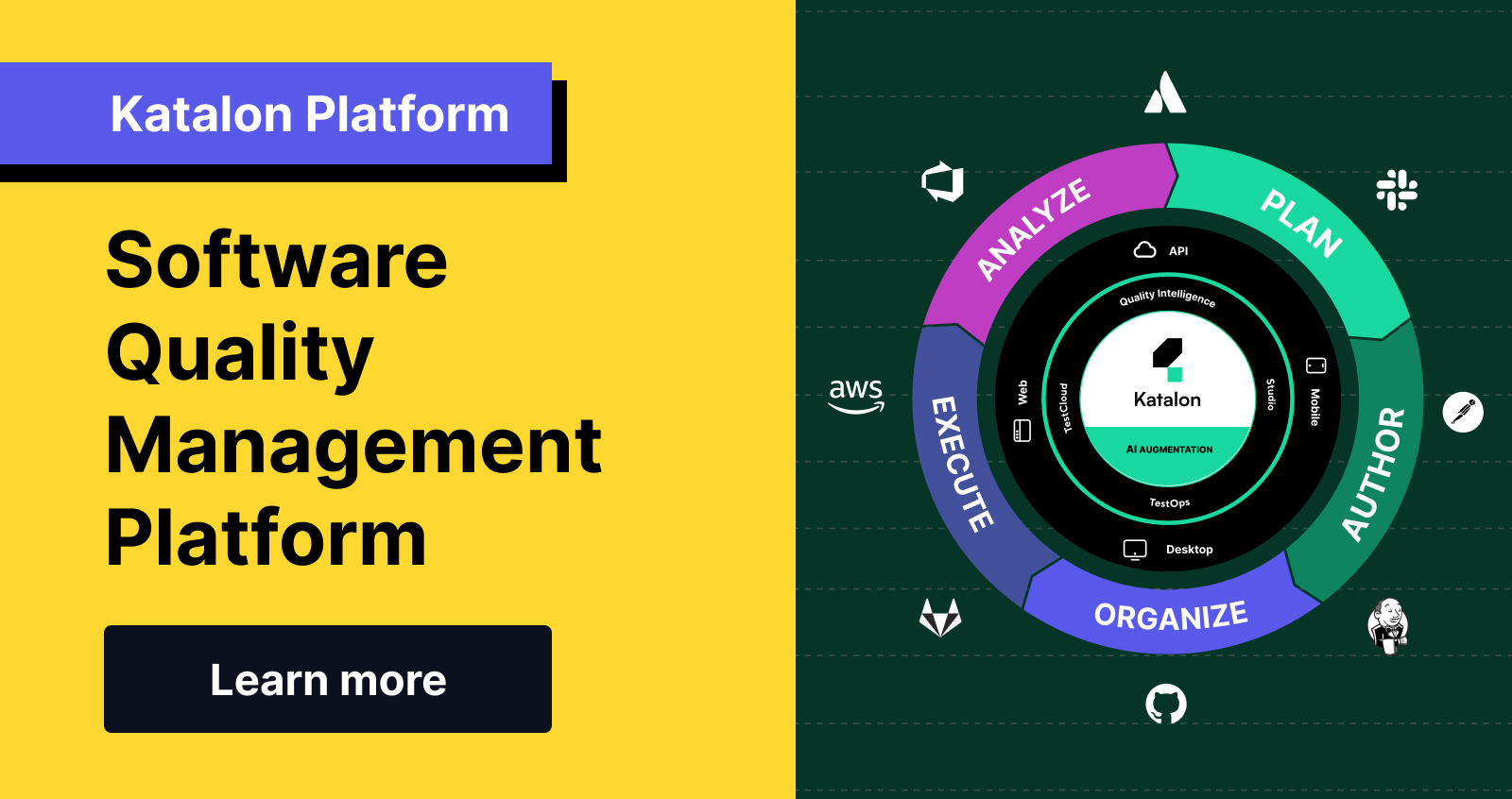

Katalon Software Quality Management Platform

Katalon is a modern and comprehensive software quality management platform. Aiming to help software teams deliver digital experiences that win, the Katalon platform simplifies API and UI automated testing processes for web, mobile and desktop apps. The platform delivers:

- Free-forever tier: Making testing easier and more accessible has always been, and will always be, our mission. Katalon can be your trusted tool to practice test automation for free, with all the necessities to test apps.

- One workspace for testing: No jumping around from tool to tool. Plan, author, organize, execute and analyze automated tests, and manage your entire software quality process on a single platform.

- Scale testing, scale automation: Katalon can meet the testing demands of teams of any size. Test on SaaS, on-premises or private cloud testing infrastructure, or all three.

- Manage manual and automated testing: Connect your favorite test management and manual testing tools. Map manual test results to Katalon tests for easy traceability of test coverage.

- On-demand test environments: Costly physical machines and devices aren’t your only options for maximum test coverage and compatibility. Cloud browsers, mobile devices and OSs let tests run in parallel across various test environments.

- Real-time picture of software quality: From detailed test logs to a high-level view of release readiness, Katalon syncs all test data across VCS, ALMs and CI/CD to help you make data-backed decisions for every launch. Slack or Teams notifications on test results are also available.

- Built-in AI: No additional configuration needed to access AI-powered features such as similar test failures, self-healing, test flakiness rate and more.